Research in Form – AI-Aided Design

with Vera van der Burg

Working with researcher & artist Vera van der Burg, we examined how an AI can help in the design process, specifically in exploring shapes on the edge of nature and technology.

What new forms can emerge in a world that looks to nature as a continuous source of inspiration?

We explored this through the lens of an AI.

Recent developments show how Artificial Intelligence is increasingly taking over human tasks. It has conversations, plays games, generates artworks, and perceives objects in images. This rise of AI applications makes it interesting to think about what this technology could mean for the future of design practice. A design process is complex: designers raise questions, challenge existing solutions and beliefs, generate new ideas, and work in several media over the course of such a process. Computers offer little assistance in the phase where a design space still has to be explored and ideas must be formed - because of the non-linear, open-ended character that design processes can have.

Could an AI fill this gap? As AI has proven that it can generate non-obvious connections and solutions, it is exciting to ask what role an AI could actually have in a creative design process.

Whereas most applications of AI aim to substitute or replace human tasks, this project takes a supportive approach and sees potential in AI becoming a collaborative partner: an AI that a designer can have a creative interaction with, appreciating it as a partner that supports and inspires, as well as challenges and surprises.

But how should a creative dialogue of co-creation and collaboration between a designer and an AI be set up? How could an AI be incorporated into a design process and enhance it by providing a new perspective, novel insight, and alternative directions instead of breaking up a creative flow? And what could it mean for a designer to design with a non-human intelligence?

We started at the very early stage of a creative process, where there is no set plan yet, just an intention to explore creatively and find inspiration. We set up a first framework: we wanted to draw inspiration from nature to generate ideas for new technologies. We also selected a specific type of AI: a Generative Adversarial Network (GAN). During training, a GAN learns the patterns in a dataset of images and reproduces them by generating new images that are similar - but not the same. In short, this network can generate new imagery based on the dataset it was trained on.
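
For readers curious about the mechanics: the text does not say which GAN variant was used, so what follows is the standard objective from the original GAN formulation, given as general background rather than as a description of this project's setup. A generator $G$ turns random noise $z$ into an image, a discriminator $D$ estimates the probability that an image comes from the training dataset, and the two are trained against each other:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $p_{\text{data}}$ stands for the training images and $p_z$ for the noise distribution. The discriminator is rewarded for telling real images from generated ones, while the generator is rewarded for fooling it, which is why the generated images end up similar to the dataset without copying it.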

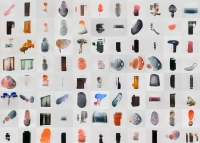

To start the exploration, we selected 500 images as our training dataset: 250 mushrooms and 250 technological devices. These images were carefully curated and prepared for the AI to process; the background was removed so that each image focused on the object. Then, this dataset was used for training the network.

The GAN produced 500 generated images: abstract, functionless shapes that were nevertheless clearly identifiable as objects. The generations contained recognizable elements, yet we did not know what these 500 new shapes actually were.
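
As an illustration of the curation step, here is a minimal sketch of how a balanced dataset like this could be assembled. It assumes the prepared images already sit in one folder per category. The folder names, the `build_manifest` helper, and the accepted file suffixes are hypothetical and not taken from the project, and background removal is left out.

```python
from pathlib import Path
import random

# Assumed file types; adjust to whatever the prepared images use.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}

def build_manifest(class_dirs, per_class=250, seed=0):
    """Return a shuffled list of (path, label) pairs with equal class sizes.

    class_dirs maps a label (e.g. "mushroom") to a folder of prepared images.
    """
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    manifest = []
    for label, folder in class_dirs.items():
        files = sorted(
            p for p in Path(folder).iterdir()
            if p.suffix.lower() in IMAGE_SUFFIXES
        )
        if len(files) < per_class:
            raise ValueError(
                f"{label}: found {len(files)} images, need {per_class}"
            )
        # Draw the same number of images from every category.
        manifest.extend((p, label) for p in rng.sample(files, per_class))
    rng.shuffle(manifest)  # mix the categories before training
    return manifest
```

Calling `build_manifest({"mushroom": "data/mushrooms", "device": "data/devices"})` with those hypothetical folders would give 500 entries, 250 per category. Keeping the two categories the same size is one simple way to keep either one from dominating what the network picks up.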

The GAN functioned as a lens that provided a new perspective on the training dataset that we carefully curated and prepared. It could be said that this GAN became a tool for exploring a specific style or aesthetic inspired by both nature and technology, offering inspiration for the designer to work with but also revealing new insight into the training dataset itself. The GAN picks up on elements in this training dataset, like color, texture or shape, and puts those elements together to create a new image. Then, it is up to the designer to decide how to incorporate this image into their practice.

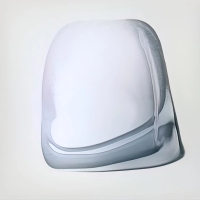

The generated images seem to be without function. A GAN cannot understand or recreate functionality, as it only produces flat, 2D images. It is up to the designer to ‘see’ functionality in the generated images, or to attribute it to them. To see if we could gradually attribute functionality, we transformed the generated images into 3D shapes - we gave them depth, texture, and more detail, still without a purpose, goal, or explicit function.

What does a creative collaboration between an AI and a designer signify? What roles do both actors take, and what new ones could be assigned? In this case, the designer first selects data. The AI generates new images based on this data. The designer interprets these generations and recreates them in a 3D world.

As humans, we can think of possible uses for these objects based on our own interpretations and ideas about the world. But what if this part, assigning function, was done by the AI? What if the AI were the one to give meaning to the abstract objects the designer created, offering a new perspective and insight?

As a speculative tool, we added another AI system, an image classifier, to identify potential uses associated with our generated forms. What does this do to the understanding of the shapes? And how could this, in turn, be used in a next step?
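
To make the idea concrete, here is a minimal sketch of how a classifier's raw scores could be turned into a short list of speculative uses. The text does not name the classifier or its labels, so the labels and scores below are invented for illustration, and `suggest_uses` is a hypothetical helper rather than part of the project.

```python
import math

def softmax(logits):
    """Convert raw classifier scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def suggest_uses(logits, labels, k=3):
    """Return the k most probable labels as (label, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical labels and scores for one rendered form (not project data).
labels = ["lamp", "speaker", "vase", "helmet"]
logits = [2.1, 0.3, 1.7, -0.5]

for label, prob in suggest_uses(logits, labels, k=2):
    print(f"{label}: {prob:.2f}")
```

Keeping several top labels instead of only the single best guess fits the speculative intent: the classifier's second and third guesses can be as suggestive to the designer as its first.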

This project showcases the emergence of ‘new’ interactions between a designer and an AI, and aims to identify the choices each actor makes and the influence each has during such a process.